While healthcare and computer science may appear to be divergent subjects in the abstract, they grow closer every day in practice. Throughout the history of medicine, technology has regularly been used to enhance human capabilities. The first stethoscope was used in 1816 to listen to a human heart. In 1896, the first X-ray was used to examine a wrist fractured while ice skating. Today the array of technologies used to “collect data” on human health is broad and varied. But computers can do more than just “collect data.” For specific use cases, they can process it faster and more accurately than clinicians; moreover, computer-processed data lends itself to highlighting relationships between data elements and surfacing clinical insights that were previously out of reach.

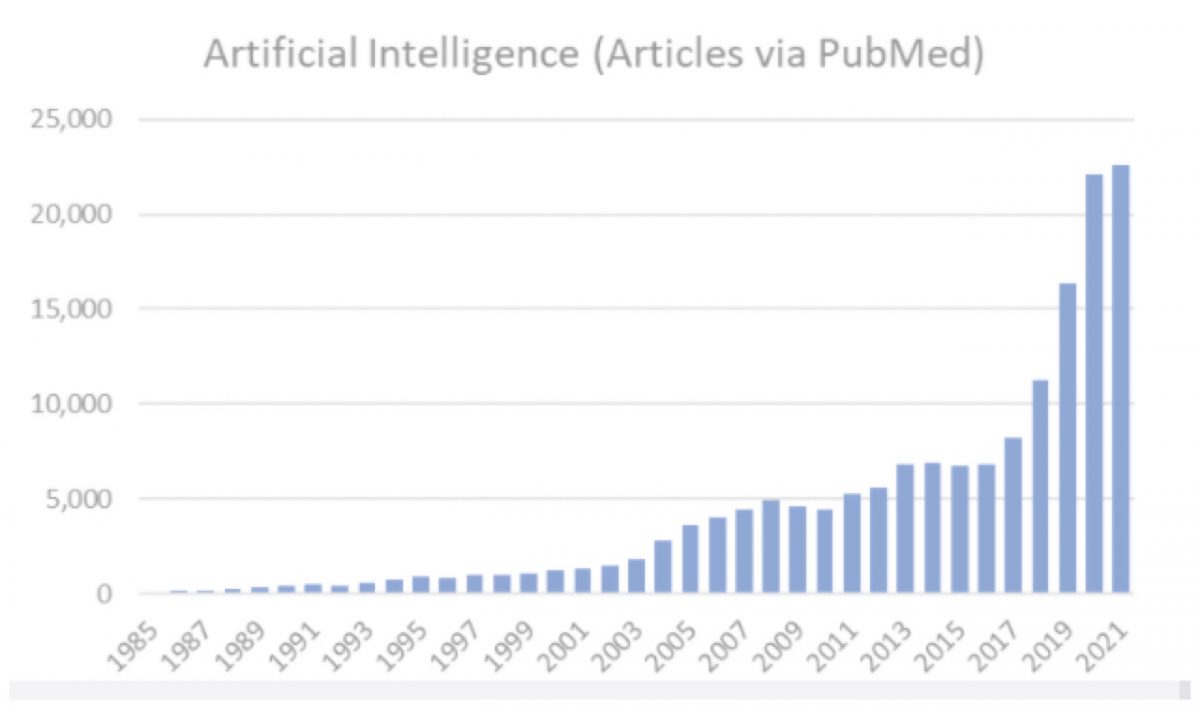

One early investigation of medical artificial intelligence, dating back to 1979, was an expert system capable of selecting disease-specific antibiotics for bacterial infections. That tool, called MYCIN, demonstrated that, by evaluating relationships in data, computers could perform at or above the level of infectious disease experts. While the potential was there, healthcare through the early 2000s recorded most information on paper, locking critical data in a state inaccessible to medical artificial intelligence programs. In the past decade, however, we have moved to an ecosystem where electronic health records (EHRs) store data digitally and communicate that information broadly according to standards. This has unlocked a new era of innovation and ingenuity in machine learning and artificial intelligence in medicine. Over the past 20 years, the volume and velocity of research and applications in medical artificial intelligence have exploded, and there is no sign of a slowdown!

As the field of medical artificial intelligence has matured, a critical need has emerged to standardize how machine-generated findings are recorded and transmitted. This need stems from the realities of patient care. First, the observations of machines need to be flagged separately from findings asserted by humans. Next, machine-made inferences can communicate probabilistic certainty in quantitative terms. While humans seldom record the confidence of a diagnosis or other observation (e.g., the patient has an 82% chance of actually having diabetes), certainty from artificial intelligence can be quantified from the strength of the model and the inputs of the algorithm used to make the inference. Finally, machine-generated findings are highly referential, requiring links to data sources and types to make the process of computation transparent and verifiable.
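
To make these three needs concrete, here is a minimal Python sketch of what a single machine-made finding might carry. It is an illustration only, not the format proposed in the paper and not an actual FHIR resource: the `MachineInference` class, its field names, the model name, and the source identifiers are all hypothetical.

```python
from dataclasses import dataclass, field, asdict
from typing import List


@dataclass
class MachineInference:
    """Hypothetical record for one machine-made finding (not a FHIR resource)."""

    finding: str        # what was inferred, e.g. a condition name
    probability: float  # model-reported certainty, between 0 and 1
    model: str          # identifier of the algorithm that produced the finding
    derived_from: List[str] = field(default_factory=list)  # links to source data
    asserted_by: str = "machine"  # keeps machine output apart from human findings

    def __post_init__(self) -> None:
        # Reject certainty values that are not valid probabilities.
        if not 0.0 <= self.probability <= 1.0:
            raise ValueError("probability must be between 0 and 1")
        # Require at least one source link so the inference can be verified.
        if not self.derived_from:
            raise ValueError("a machine inference must cite its source data")


# Example values below are invented for illustration.
inference = MachineInference(
    finding="diabetes mellitus",
    probability=0.82,
    model="example-risk-model-v1",
    derived_from=["Observation/hba1c-001", "Observation/glucose-002"],
)

# A plain dictionary is easy to serialize and hand to another system.
record = asdict(inference)
```

Each field corresponds to one of the needs above: `asserted_by` flags the finding as machine-made, `probability` quantifies certainty, and `derived_from` together with `model` keeps the computation traceable.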

In the paper “FHIR-Enabled AI: A proposal for standards for output from AI,” Sam Schifman, Chief Architect at Diameter Health, and his colleagues Paulo Pinho, MD, and Christopher Vitale, PharmD, propose how the issues described above can be meaningfully addressed using the Fast Healthcare Interoperability Resources (FHIR) standard. The FHIR standard has been developed by HL7, the leading organization for healthcare standards, and represents a logical starting place for machine learning and artificial intelligence standards.

I’ve had the pleasure of working with all of the authors of the piece and can say that their perspectives are invaluable. I encourage you to read it and learn about one more way that healthcare and computer science are being united in the pursuit of more effective and higher-quality care.